Journal, Conference and Workshop Publications

[ACS 2022] Kondrakunta, S., Gogineni, V. R., & Cox, M. T. (In press). Agent Goal Management using Goal Operations. In the Tenth Advances in Cognitive Systems Conference-2022. Cognitive Systems Foundation.

[ACS 2022] Gogineni, V. R., Kondrakunta, S., & Cox, M. T. (In press). Multi-agent Goal Delegation. In the Tenth Advances in Cognitive Systems Conference-2022. Cognitive Systems Foundation.

[GRW 2021] Kondrakunta, S., Gogineni, V. R., & Cox, M. T. (2021, December). Agent Goal Management using Goal Operations. In the Ninth Goal Reasoning Workshop at Advances in Cognitive Systems Conference-2021. Cognitive Systems Foundation.

[GRW 2021] Gogineni, V. R., Kondrakunta, S., & Cox, M. T. (2021, December). Multi-agent Goal Delegation. In the Ninth Goal Reasoning Workshop at Advances in Cognitive Systems Conference-2021. Cognitive Systems Foundation.

[ACS 2021] Yuan, W., Munoz-Avila, H., Gogineni, V. R., Kondrakunta, S., Cox, M. T., & He, L. (In press). Task Modifiers for HTN Planning and Acting. Poster presented at the Ninth Annual Conference on Advances in Cognitive Systems. Cognitive Systems Foundation.

[ACS 2021] Kondrakunta, S., Gogineni, V. R., Cox, M. T., Coleman, D., Tan, X., Lin, T., Hou, M., Zhang, F., McQuarrie, F., & Edwards, C. (In press). The Rational Selection of Goal Operations and the Integration of Search Strategies with Goal-Driven Marine Autonomy. In the Ninth Annual Conference on Advances in Cognitive Systems. Cognitive Systems Foundation.

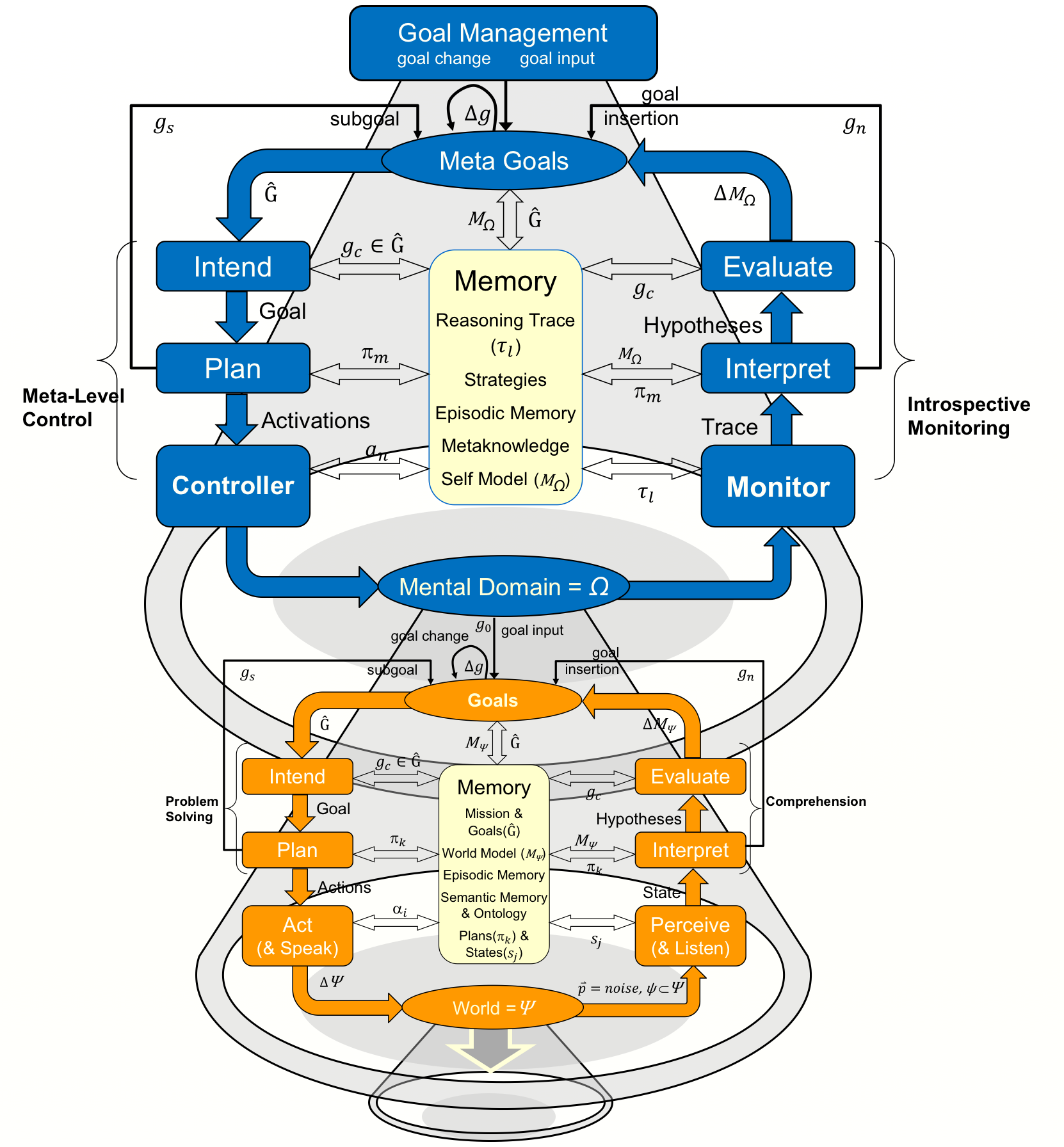

[ACS 2021] Cox, M. T., Mohammad, Z., Kondrakunta, S., Gogineni, V. R., Dannenhauer, D., & Larue, O. (In press). Computational Metacognition. In the Ninth Annual Conference on Advances in Cognitive Systems. Cognitive Systems Foundation.

[ACC 2021] Kondrakunta, S., Gogineni, V. R., Molineaux, M., & Cox, M. T. (In press). Problem recognition, explanation and goal formulation. In the Fifth International Conference on Applied Cognitive Computing (ACC). Springer.

[ACC 2021] Kondrakunta, S., & Cox, M. T. (In press). Autonomous Goal Selection Operations for Agent-Based Architectures. In the Fifth International Conference on Applied Cognitive Computing (ACC). Springer.

[FLAIRS 2020] Gogineni, V., Kondrakunta, S., Molineaux, M., & Cox, M. T. (2020, May). Case-Based Explanations and Goal Specific Resource Estimations. In the Thirty-Third Florida Artificial Intelligence Research Society Conference, North America (pp. 407-412). AAAI Press.

[MIDCA Workshop 2019] Dannenhauer, D., Schmitz, S., Eyorokon, V., Gogineni, V. R., Kondrakunta, S., Williams, T., & Cox, M. T. (2019). MIDCA Version 1.4: User manual and tutorial for the Metacognitive Integrated Dual-Cycle Architecture (Tech. Rep. No. COLAB2-TR-3). Dayton, OH: Wright State University, Collaboration and Cognition Laboratory.

[ACS 2019] Kondrakunta, S., Gogineni, V. R., Brown, D., Molineaux, M., & Cox, M. T. (2019). Problem recognition, explanation and goal formulation. In Proceedings of the Seventh Annual Conference on Advances in Cognitive Systems (pp. 437-452). Cognitive Systems Foundation.

[ICCBR 2019] Gogineni, V. R., Kondrakunta, S., Brown, D., Molineaux, M., & Cox, M. T. (2019, September). Probabilistic Selection of Case-Based Explanations in an Underwater Mine Clearance Domain. In International Conference on Case-Based Reasoning (pp. 110-124). Springer, Cham.

[GRW: IJCAI 2018] Kondrakunta, S., Gogineni, V. R., Molineaux, M., Munoz-Avila, H., Oxenham, M., & Cox, M. T. (2018). Toward problem recognition, explanation and goal formulation. In Working Notes of the 2018 IJCAI/FAIM Goal Reasoning Workshop, Stockholm, Sweden. IJCAI.

[XCBR: ICCBR 2018] Gogineni, V., Kondrakunta, S., Molineaux, M., & Cox, M. T. (2018). Application of case-based explanations to formulate goals in an unpredictable mine clearance domain. In Proceedings of the ICCBR-2018 Workshop on Case-Based Reasoning for the Explanation of Intelligent Systems, Stockholm, Sweden (pp. 42-51). Springer, Cham.

[GRW: IJCAI 2017] Dannenhauer, D., Munoz-Avila, H., & Kondrakunta, S. (2017). Goal-Driven Autonomy Agents with Sensing Costs. In Working Notes of the 2017 IJCAI Goal Reasoning Workshop, Melbourne, Australia. IJCAI.

[GRW: IJCAI 2017] Kondrakunta, S., & Cox, M. T. (2017, July). Autonomous goal selection operations for agent-based architectures. In Working Notes of the 2017 IJCAI Goal Reasoning Workshop, Melbourne, Australia. IJCAI.

[AAAI 2017] Cox, M., Dannenhauer, D., & Kondrakunta, S. (2017, February). Goal operations for cognitive systems. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 31, No. 1). AAAI Press.

[IEEE 2015] Kishore, P. V. V., Rahul, R., Kondrakunta, S., & Sastry, A. S. C. S. (2015, August). Crowd density analysis and tracking. In 2015 International Conference on Advances in Computing, Communications and Informatics (ICACCI) (pp. 1209-1213). IEEE.